What Is Edge Computing? A Simple Explanation for Beginners (2025 Guide)

Introduction

Technology is evolving quickly, and every year new terms appear that can be confusing, especially for beginners. One of the most talked-about today is edge computing. You may have heard the term in discussions about 5G, artificial intelligence, smart devices, or cloud computing, but still wonder what it really means.

This guide is written specifically for beginners. It explains what edge computing is, how it works, why it is important, and how it will shape the future in 2025 and beyond. By the end of this article, you will clearly understand why edge computing is considered one of the most important technologies of the modern digital world.

What Is Edge Computing?

Edge computing is an approach that processes data close to where it is generated, instead of sending all of it to a centralized cloud or remote data center.

In simple terms:

- Cloud Computing → Data is sent to distant servers for processing

- Edge Computing → Data is processed near the source (device, router, or local server)

For example, instead of a smart camera sending all video footage to the cloud, edge computing allows the camera (or a nearby device) to analyze the video locally and send only important results.

This approach can make systems faster and more bandwidth-efficient, and it can improve privacy because less raw data leaves the device.
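The smart camera example can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: each "frame" is a short list of brightness values rather than a real image, and the function names and thresholds (`changed_fraction`, `frames_worth_sending`, `pixel_delta`, `min_fraction`) are made up for this guide, not taken from any camera product.

```python
from typing import List

Frame = List[int]  # a tiny grayscale "image" flattened to pixel values 0-255


def changed_fraction(prev: Frame, curr: Frame, pixel_delta: int = 30) -> float:
    """Fraction of pixels whose brightness changed by more than pixel_delta."""
    changed = sum(1 for a, b in zip(prev, curr) if abs(a - b) > pixel_delta)
    return changed / len(curr)


def frames_worth_sending(frames: List[Frame], min_fraction: float = 0.25) -> List[int]:
    """Return indexes of frames that differ noticeably from the frame before them."""
    return [
        i
        for i in range(1, len(frames))
        if changed_fraction(frames[i - 1], frames[i]) >= min_fraction
    ]


# Four 4-pixel frames: nothing moves until frame 2, then the scene stays changed.
frames = [
    [10, 10, 10, 10],
    [12, 9, 10, 11],
    [200, 210, 10, 11],
    [201, 209, 11, 10],
]
print(frames_worth_sending(frames))  # [2]
```

Only frame 2 would be sent to the cloud here; the other frames are analyzed and discarded on the device.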

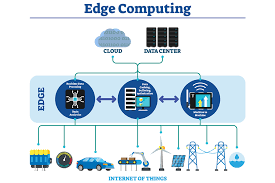

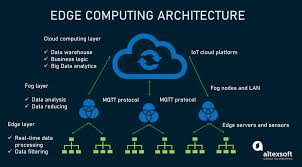

How Does Edge Computing Work?

Edge computing works through a simple process:

1. Data Collection

Data is generated by:

- Smartphones

- IoT sensors

- Smart cameras

- Wearable devices

- Industrial machines

2. Local Processing

Instead of sending raw data to the cloud, processing happens at:

- The device itself

- A nearby edge server

- A local network gateway

3. Cloud Synchronization

Only important or summarized data is sent to the cloud for:

- Storage

- Advanced analytics

- Backup

This method reduces delay, bandwidth usage, and cloud costs.
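The three steps above can be sketched as a tiny Python program. The sensor values, the alert threshold, and the function names (`collect_readings`, `process_locally`, `sync_to_cloud`) are assumptions made up for illustration; a real system would read from hardware and upload over a network.

```python
import json
from statistics import mean
from typing import Dict, List


def collect_readings() -> List[float]:
    """Step 1: stand-in for a sensor; returns a few temperature samples in Celsius."""
    return [21.0, 21.2, 21.1, 35.5, 21.3, 21.0]


def process_locally(readings: List[float], alert_above: float = 30.0) -> Dict[str, float]:
    """Step 2: reduce the raw samples to a small summary on the edge device."""
    return {
        "count": len(readings),
        "mean": round(mean(readings), 2),
        "max": max(readings),
        "alerts": sum(1 for r in readings if r > alert_above),
    }


def sync_to_cloud(summary: Dict[str, float]) -> str:
    """Step 3: hypothetical upload; here we only build the JSON payload."""
    return json.dumps(summary)


summary = process_locally(collect_readings())
payload = sync_to_cloud(summary)
print(payload)
```

The summary has the same four fields whether the device collected six samples or six million, which is why the amount of data sent to the cloud stays small.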

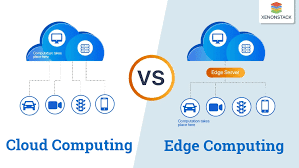

Edge Computing vs Cloud Computing

Many beginners ask whether edge computing will replace cloud computing. The answer is no. They work together.

| Feature | Edge Computing | Cloud Computing |
| --- | --- | --- |
| Data location | Near the source | Centralized servers |
| Speed | Very fast | Slight delay |
| Internet dependency | Low | High |
| Security | Higher | Depends on network |
| Cost efficiency | Lower bandwidth cost | Ongoing cloud fees |

👉 The best systems use both edge and cloud computing together.

Why Is Edge Computing Important?

Edge computing exists because modern applications demand speed, reliability, and security.

1. Low Latency Requirements

Applications such as:

- Online gaming

- Autonomous vehicles

- Real-time video analytics

cannot wait for cloud responses.
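A rough calculation shows why distance matters. Light in optical fiber travels at roughly 200,000 km per second (about two thirds of its speed in a vacuum), so every kilometer adds delay before any processing starts. The distances below are assumed example values, and real networks add routing and processing time on top of this minimum.

```python
FIBER_SPEED_KM_PER_S = 200_000  # light in optical fiber, roughly two thirds of c


def round_trip_ms(distance_km: float) -> float:
    """Minimum round-trip propagation delay in milliseconds (no processing or queuing)."""
    return 2 * distance_km / FIBER_SPEED_KM_PER_S * 1000


for label, km in [("edge server, 1 km away", 1), ("cloud region, 2,000 km away", 2000)]:
    print(f"{label}: {round_trip_ms(km):.2f} ms")
```

Under these assumptions the nearby edge server has a floor of about 0.01 ms, while the distant cloud region cannot respond in less than about 20 ms.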

2. Explosion of IoT Devices

Billions of connected devices generate massive amounts of data every second. Sending all of it to the cloud is inefficient.

3. Improved Security & Privacy

Processing data locally means less sensitive data travels over the network, which reduces its exposure to interception and data leaks.

4. Bandwidth Optimization

Because only results or summaries are sent upstream, edge computing can significantly reduce internet data usage.
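To get a feel for the difference, here is a back-of-the-envelope comparison in Python. All numbers are assumed for illustration: a camera streaming continuously at 4 Mbps versus the same camera sending 50 event snapshots of 200 KB each per day.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def stream_gb_per_day(bitrate_mbps: float) -> float:
    """Data sent per day when streaming continuously, in decimal gigabytes."""
    megabits = bitrate_mbps * SECONDS_PER_DAY
    return megabits / 8 / 1000  # megabits -> megabytes -> gigabytes


def events_gb_per_day(events: int, kb_per_event: float) -> float:
    """Data sent per day when only event snapshots are uploaded."""
    return events * kb_per_event / 1_000_000  # kilobytes -> gigabytes


cloud_only = stream_gb_per_day(4)      # about 43.2 GB per day
edge_first = events_gb_per_day(50, 200)  # about 0.01 GB per day
print(f"cloud-only: {cloud_only:.1f} GB/day, edge-first: {edge_first:.2f} GB/day")
```

With these example figures the edge-first camera uploads thousands of times less data, although the real saving depends entirely on how often something worth reporting happens.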

Real-World Examples of Edge Computing

🚗 Autonomous Vehicles

Self-driving cars analyze sensor data locally to make instant decisions like braking or steering.

🏥 Healthcare Systems

Medical devices monitor patients in real time and alert doctors immediately when issues arise.

🏭 Smart Manufacturing

Machines detect faults early, reducing downtime and maintenance costs.
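One simple way a machine could spot a fault locally is to compare each new sensor reading with its own recent baseline. The sketch below is one possible approach, not a description of any particular factory system; the function name `detect_faults`, the window size, the threshold, and the vibration values are all assumptions.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque, Iterable, List


def detect_faults(samples: Iterable[float], window: int = 5, threshold: float = 3.0) -> List[int]:
    """Return indexes of samples that deviate from the recent baseline.

    A sample is flagged when it lies more than `threshold` standard
    deviations from the mean of the last `window` normal samples.
    """
    recent: Deque[float] = deque(maxlen=window)
    flagged: List[int] = []
    for i, value in enumerate(samples):
        if len(recent) == window:
            baseline, spread = mean(recent), pstdev(recent)
            if spread > 0 and abs(value - baseline) > threshold * spread:
                flagged.append(i)
                continue  # keep outliers out of the baseline
        recent.append(value)
    return flagged


vibration_mm_s = [2.0, 2.1, 1.9, 2.0, 2.2, 2.1, 6.5, 2.0, 2.1]
print(detect_faults(vibration_mm_s))  # [6]
```

Because the check runs on the machine itself, only the flagged reading needs to be reported, and the alert does not depend on a cloud connection.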

🏠 Smart Homes

Smart cameras, voice assistants, and security systems respond instantly without relying entirely on the cloud.

Advantages of Edge Computing

Edge computing offers several major benefits:

- ⚡ Faster response times

- 🔐 Enhanced data security

- 💰 Reduced cloud and bandwidth costs

- 📉 Lower network congestion

- 🌍 Reliable performance in poor internet areas

These advantages make it ideal for modern digital applications.
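Reliable performance on a poor connection often comes from a "store and forward" pattern: the device keeps working, queues its results, and uploads them when the link returns. The class below is a minimal sketch of that idea; `StoreAndForward`, `fake_send`, and the simulated `link` are hypothetical names invented for this example.

```python
from collections import deque
from typing import Callable, Deque, List


class StoreAndForward:
    """Queue results locally and upload them when the link is available."""

    def __init__(self, send: Callable[[str], bool], max_items: int = 1000) -> None:
        self._send = send  # hypothetical uploader; returns True on success
        self._queue: Deque[str] = deque(maxlen=max_items)  # oldest items drop when full

    def record(self, message: str) -> None:
        self._queue.append(message)
        self.flush()

    def flush(self) -> int:
        """Send queued messages in order; stop at the first failure."""
        sent = 0
        while self._queue and self._send(self._queue[0]):
            self._queue.popleft()
            sent += 1
        return sent

    def pending(self) -> int:
        return len(self._queue)


# Simulated link that is down at first and then comes back.
link = {"online": False}
delivered: List[str] = []


def fake_send(message: str) -> bool:
    if link["online"]:
        delivered.append(message)
    return link["online"]


buffer = StoreAndForward(fake_send)
buffer.record("door opened")
buffer.record("door closed")
pending_while_offline = buffer.pending()  # both messages wait locally
link["online"] = True
buffer.flush()
print(pending_while_offline, delivered)
```

The bounded queue is a deliberate trade-off: on a small device it is usually better to drop the oldest entries than to run out of memory during a long outage.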

Disadvantages of Edge Computing

Despite its benefits, edge computing has some challenges:

- Initial hardware investment

- Complex management across multiple devices

- Security maintenance at the edge level

However, these challenges are becoming easier to manage as edge hardware and device-management tools improve.

Edge Computing in 2025 and Beyond

By 2025, edge computing is expected to become:

- A core component of 5G and future 6G networks

- Essential for real-time AI applications

- A foundation for smart cities and smart businesses

Many industry analysts expect a growing share of data processing to happen at the edge, while the cloud continues to handle storage and large-scale analytics.

Who Should Learn About Edge Computing?

Edge computing is valuable knowledge for:

- Website owners

- Bloggers and content creators

- Digital marketers

- Tech students

- Online entrepreneurs

Understanding this technology can open doors to new business and career opportunities.

Final Thoughts

Edge computing is transforming how data is processed and delivered. By bringing computation closer to the source, it offers speed, privacy, and efficiency that cloud-only systems cannot always provide.

As digital technologies continue to evolve, edge computing will play a critical role in shaping the future of the internet, smart devices, and online businesses. Learning about it today gives you a strong advantage for tomorrow.

Frequently Asked Questions (FAQ)

What is edge computing in simple terms?

Edge computing is a technology that processes data close to where it is generated instead of sending it to distant cloud servers.

Is edge computing expensive?

Edge computing may have higher initial costs, but it reduces long-term cloud and bandwidth expenses.

Why is edge computing important in 2025?

Edge computing is important because it supports real-time applications, AI, IoT devices, and faster internet services.